Get started building your own client that can integrate with all MCP servers.

In this tutorial, you’ll learn how to build an LLM-powered chatbot client that connects to MCP servers. It helps to have gone through the Server quickstart, which guides you through the basics of building your first server.

Python#

System Requirements#

Before starting, ensure your system meets these requirements:

Latest Python version installed

Latest version of uv installed

Setting Up Your Environment#

First, create a new Python project with uv:

Setting Up Your API Key#

Create a .env file to store your Anthropic API key:

Add your key to the .env file:

Add .env to your .gitignore:

Make sure you keep your ANTHROPIC_API_KEY secure!

Creating the Client#

Basic Client Structure#

First, let’s set up our imports and create the basic client class:

Server Connection Management#

Next, we’ll implement the method to connect to an MCP server:

Query Processing Logic#

Now let’s add the core functionality for processing queries and handling tool calls:

Interactive Chat Interface#

Now we’ll add the chat loop and cleanup functionality:

Main Entry Point#

Finally, we’ll add the main execution logic:

You can find the complete client.py file here.

Key Components Explained#

1. Client Initialization#

The MCPClient class initializes with session management and API clients

Uses AsyncExitStack for proper resource management

Configures the Anthropic client for Claude interactions

2. Server Connection#

Supports both Python and Node.js servers

Validates server script type

Sets up proper communication channels

Initializes the session and lists available tools
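The script-type check described above can be sketched as a small helper. This is a minimal illustration, not the tutorial's exact code: the function name `build_server_command` is hypothetical, and it assumes that `python` and `node` are available on the PATH.

```python
from typing import List, Tuple


def build_server_command(server_script_path: str) -> Tuple[str, List[str]]:
    """Pick the interpreter for a server script based on its extension."""
    is_python = server_script_path.endswith(".py")
    is_js = server_script_path.endswith(".js")
    if not (is_python or is_js):
        # Reject anything that is neither a Python nor a Node.js script.
        raise ValueError("Server script must be a .py or .js file")
    command = "python" if is_python else "node"
    return command, [server_script_path]
```

The returned command and argument list would then be handed to whatever stdio transport the client uses to launch the server.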

3. Query Processing#

Maintains conversation context

Handles Claude’s responses and tool calls

Manages the message flow between Claude and tools

Combines results into a coherent response
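The message flow above can be sketched without any network calls by stubbing out both Claude and the server. In this sketch, `ask_model` and `call_tool` are hypothetical callables standing in for the real API client and MCP session, and the message shapes (`text`, `tool_use`, and `tool_result` blocks) are an assumption modelled on the Anthropic Messages API. The real client is asynchronous; this version is synchronous to keep the loop easy to follow.

```python
from typing import Any, Callable, Dict, List

Block = Dict[str, Any]
Message = Dict[str, Any]


def process_query(
    query: str,
    ask_model: Callable[[List[Message]], List[Block]],
    call_tool: Callable[[str, Dict[str, Any]], str],
    max_rounds: int = 5,
) -> str:
    """Run one query through a model/tool loop and return the combined text."""
    messages: List[Message] = [{"role": "user", "content": query}]
    final_text: List[str] = []

    for _ in range(max_rounds):
        blocks = ask_model(messages)
        # Keep the assistant turn in the history so context is preserved.
        messages.append({"role": "assistant", "content": blocks})

        tool_results: List[Block] = []
        for block in blocks:
            if block["type"] == "text":
                final_text.append(block["text"])
            elif block["type"] == "tool_use":
                result = call_tool(block["name"], block["input"])
                final_text.append(
                    f"[Calling tool {block['name']} with args {block['input']}]"
                )
                tool_results.append(
                    {"type": "tool_result", "tool_use_id": block["id"], "content": result}
                )

        if not tool_results:
            break  # The model answered without asking for more tools.
        # Tool results go back to the model as a user turn.
        messages.append({"role": "user", "content": tool_results})

    return "\n".join(final_text)


def fake_model(messages: List[Message]) -> List[Block]:
    # First call: request a tool. Later calls: answer in plain text.
    if len(messages) == 1:
        return [{"type": "tool_use", "id": "t1", "name": "get_forecast",
                 "input": {"city": "Paris"}}]
    return [{"type": "text", "text": "It is sunny in Paris."}]


def fake_tool(name: str, args: Dict[str, Any]) -> str:
    return "sunny"


answer = process_query("Weather in Paris?", fake_model, fake_tool)
```

The `max_rounds` cap is a design choice of this sketch: it stops a model that keeps requesting tools from looping indefinitely.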

4. Interactive Interface#

Provides a simple command-line interface

Handles user input and displays responses

Includes basic error handling
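A command-line loop with basic error handling might look like the following sketch. It is synchronous for simplicity, whereas the tutorial's client awaits each query. The `read` and `write` parameters are hypothetical hooks added here so the loop can be driven by a script as well as by a terminal.

```python
from typing import Callable


def chat_loop(
    handle_query: Callable[[str], str],
    read: Callable[[str], str] = input,
    write: Callable[[str], None] = print,
) -> None:
    """Read queries until the user types 'quit', writing each response."""
    write("Type your queries or 'quit' to exit.")
    while True:
        try:
            query = read("\nQuery: ").strip()
        except (EOFError, KeyboardInterrupt):
            break  # Treat Ctrl-D / Ctrl-C as a request to exit.
        if query.lower() == "quit":
            break
        try:
            write(handle_query(query))
        except Exception as exc:
            # Report the failure but keep the session alive.
            write(f"Error: {exc}")
```

Calling `chat_loop(my_handler)` with the defaults reads from standard input and prints to the console.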

5. Resource Management#

Proper cleanup of resources

Error handling for connection issues

Graceful shutdown procedures
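The cleanup behaviour above can be demonstrated with `AsyncExitStack` and stand-in resources. In this sketch, `fake_resource` is a hypothetical placeholder for the real transport and session, and the `events` list exists only to make the order of operations visible.

```python
import asyncio
from contextlib import AsyncExitStack, asynccontextmanager
from typing import AsyncIterator, List

events: List[str] = []


@asynccontextmanager
async def fake_resource(name: str) -> AsyncIterator[str]:
    """Stand-in for a transport or session that must be closed."""
    events.append(f"open {name}")
    try:
        yield name
    finally:
        events.append(f"close {name}")


class MCPClient:
    def __init__(self) -> None:
        self.exit_stack = AsyncExitStack()

    async def connect(self) -> None:
        # Each resource is registered on the stack as soon as it is opened.
        await self.exit_stack.enter_async_context(fake_resource("transport"))
        await self.exit_stack.enter_async_context(fake_resource("session"))

    async def cleanup(self) -> None:
        # Closes everything in reverse order of registration.
        await self.exit_stack.aclose()


async def main() -> None:
    client = MCPClient()
    try:
        await client.connect()
        raise ConnectionError("server went away")  # Simulated failure.
    except ConnectionError as exc:
        events.append(f"error: {exc}")
    finally:
        await client.cleanup()


asyncio.run(main())
```

Because cleanup runs in a `finally` block, the session and transport are closed even when the connection fails partway through.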

Common Customization Points#

1. Tool handling

Modify process_query() to handle specific tool types

Add custom error handling for tool calls

Implement tool-specific response formatting

2. Response processing

Customize how tool results are formatted

Add response filtering or transformation

3. User interface

Add a GUI or web interface

Implement rich console output

Add command history or auto-completion
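As one illustration of customizing how tool results are formatted, the sketch below renders a tool call as a single line and truncates long output. The function `format_tool_result` and its `max_chars` parameter are hypothetical, not part of the tutorial's client, and the sketch assumes `max_chars` is at least 3.

```python
import json
from typing import Any, Dict


def format_tool_result(
    name: str, args: Dict[str, Any], result: str, max_chars: int = 80
) -> str:
    """Render a tool call as one readable line, truncating long output."""
    text = " ".join(result.split())  # Collapse newlines and repeated spaces.
    if len(text) > max_chars:
        text = text[: max_chars - 3] + "..."
    return f"[{name}({json.dumps(args, sort_keys=True)})] {text}"
```

A helper like this could be called from process_query() wherever a tool result is added to the output.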

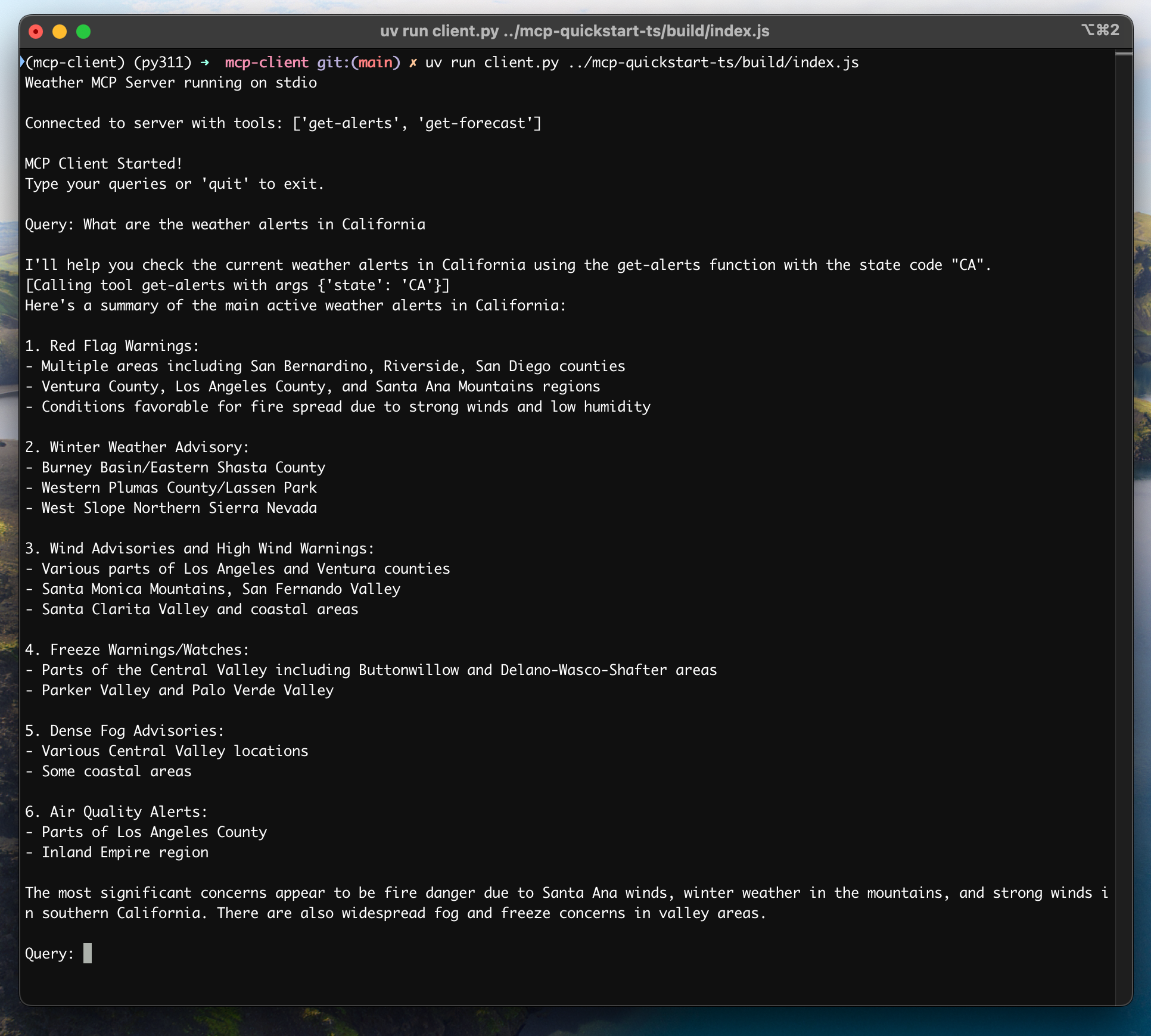

Running the Client#

To run your client with any MCP server:

If you’re continuing the weather tutorial from the server quickstart, your command might look something like this: python client.py .../weather/src/weather/server.py

The client will:

1. Connect to the specified server

2. List the available tools

3. Start an interactive chat session where you can:

Get responses from Claude

How It Works#

1. The client gets the list of available tools from the server

2. Your query is sent to Claude along with tool descriptions

3. Claude decides which tools (if any) to use

4. The client executes any requested tool calls through the server

5. Results are sent back to Claude

6. Claude provides a natural language response

7. The response is displayed to you
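Steps 1 and 2 above involve turning the server's tool listing into the tool descriptions sent alongside the query. The sketch below assumes each server tool exposes `name`, `description`, and `inputSchema` fields, and that the model API expects an `input_schema` key. The `Tool` dataclass is a hypothetical stand-in for the SDK's own type.

```python
from dataclasses import dataclass
from typing import Any, Dict, List


@dataclass
class Tool:
    """Stand-in for a tool entry returned by the server's tool listing."""
    name: str
    description: str
    inputSchema: Dict[str, Any]


def to_model_tools(tools: List[Tool]) -> List[Dict[str, Any]]:
    """Convert server tool entries into the descriptions sent with a query."""
    return [
        {
            "name": tool.name,
            "description": tool.description,
            "input_schema": tool.inputSchema,
        }
        for tool in tools
    ]
```

Claude uses these descriptions in step 3 to decide which tool, if any, to request.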

Best practices#

1. Error handling

Always wrap tool calls in try/except blocks

Provide meaningful error messages

Gracefully handle connection issues

2. Resource management

Use AsyncExitStack for proper cleanup

Close connections when done

Handle server disconnections

3. Security

Store API keys securely in .env

Validate server responses

Be cautious with tool permissions
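The error-handling advice above can be sketched as a wrapper that turns tool failures into readable messages instead of crashing the chat session. Here `safe_call_tool` is a hypothetical helper, and `call_tool` stands in for whatever coroutine actually invokes the tool on the server.

```python
import asyncio
from typing import Any, Awaitable, Callable, Dict


async def safe_call_tool(
    call_tool: Callable[[str, Dict[str, Any]], Awaitable[str]],
    name: str,
    args: Dict[str, Any],
) -> str:
    """Call a tool and report failures as text rather than raising."""
    try:
        return await call_tool(name, args)
    except ConnectionError as exc:
        # Connection problems get their own message so users know to retry.
        return f"Tool '{name}' is unavailable: lost connection to the server ({exc})"
    except Exception as exc:
        return f"Tool '{name}' failed with {type(exc).__name__}: {exc}"


async def broken_tool(name: str, args: Dict[str, Any]) -> str:
    raise ValueError("missing API key")  # Simulated tool failure.


message = asyncio.run(safe_call_tool(broken_tool, "get_forecast", {}))
```

Returning the message as text lets it be passed back to Claude as the tool result, so the model can explain the failure to the user.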

Troubleshooting#

Server Path Issues#

Double-check the path to your server script is correct

Use the absolute path if the relative path isn’t working

For Windows users, make sure to use forward slashes (/) or escaped backslashes (\\) in the path

Verify the server file has the correct extension (.py for Python or .js for Node.js)
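The path checks above can be automated before the client tries to launch the server. This is a minimal sketch using `pathlib`. The helper name `resolve_server_script` is hypothetical.

```python
from pathlib import Path


def resolve_server_script(path_str: str) -> Path:
    """Return an absolute path to the server script, or raise a clear error."""
    # resolve() yields an absolute path, which sidesteps relative-path issues.
    path = Path(path_str).expanduser().resolve()
    if path.suffix not in {".py", ".js"}:
        raise ValueError(f"Expected a .py or .js server script, got: {path.name}")
    if not path.is_file():
        # as_posix() prints forward slashes on every platform.
        raise FileNotFoundError(f"Server script not found: {path.as_posix()}")
    return path
```

Printing the resolved absolute path in the error message makes it easier to spot a mistyped or misplaced script.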

Response Timing#

The first response might take up to 30 seconds to return

This is normal and happens while:

Claude processes the query

Subsequent responses are typically faster

Don’t interrupt the process during this initial waiting period

Common Error Messages#

FileNotFoundError: Check your server path

Connection refused: Ensure the server is running and the path is correct

Tool execution failed: Verify the tool’s required environment variables are set

Timeout error: Consider increasing the timeout in your client configuration
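For timeout errors, one option is to wrap slow operations in an explicit, adjustable timeout. The sketch below uses `asyncio.wait_for`. The helper `with_timeout` is hypothetical, and it assumes the client wraps calls itself rather than relying on any timeout setting the SDK may provide.

```python
import asyncio
from typing import Awaitable, TypeVar

T = TypeVar("T")


async def with_timeout(operation: Awaitable[T], seconds: float, what: str) -> T:
    """Await an operation, raising a descriptive TimeoutError if it is too slow."""
    try:
        return await asyncio.wait_for(operation, timeout=seconds)
    except asyncio.TimeoutError:
        raise TimeoutError(f"{what} did not finish within {seconds} seconds") from None


async def slow_tool() -> str:
    await asyncio.sleep(1)  # Simulated slow tool call.
    return "late"


async def fast_tool() -> str:
    return "ok"


result = asyncio.run(with_timeout(fast_tool(), 1.0, "fast tool call"))
```

Since the first response can take up to 30 seconds, a timeout for the initial query would need to be at least that generous.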

Modified at 2025-03-12 06:39:40